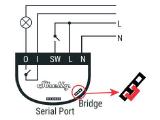

Automate your home with a Shelly 1 Wi-Fi module


I’m a bit conservative about automating my home because of vendor lock-in and the requirement to have a central hub. Last Black Friday I came across the Shelly products. Shelly doesn’t require a central hub; the modules only need a Wi-Fi connection. There’s no vendor lock-in either, because each module has its own web interface and API. That sounded interesting, so I bought a couple of Shelly 1s! In this article I’ll tell you more about the Shelly 1 and how I use them to automate my front door and back door lights.
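Because each module exposes its own local API, you can switch a relay with a plain HTTP request and no cloud service in between. The sketch below assumes the first-generation (Gen1) Shelly HTTP API, where the relay is controlled through `/relay/0?turn=on|off|toggle`, and assumes authentication is disabled on the module. Newer Shelly Plus devices use a different (RPC-style) API. The host address and the helper names `relay_url` and `set_relay` are hypothetical, for illustration only.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Actions accepted by the (assumed) Gen1 /relay endpoint.
VALID_ACTIONS = ("on", "off", "toggle")


def relay_url(host: str, action: str, channel: int = 0) -> str:
    """Build a Gen1-style relay URL such as http://<host>/relay/0?turn=on."""
    if action not in VALID_ACTIONS:
        raise ValueError(f"unsupported action: {action!r}")
    return f"http://{host}/relay/{channel}?{urlencode({'turn': action})}"


def set_relay(host: str, action: str, timeout: float = 5.0) -> dict:
    """Send the command to the module and return its parsed JSON reply."""
    with urlopen(relay_url(host, action), timeout=timeout) as resp:
        return json.load(resp)
```

For example, `set_relay("192.168.1.50", "on")` (with a hypothetical local IP address) would ask the module to close its relay and switch the light on; the module should answer with a small JSON object describing the relay state.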